Table of Contents

TLDR

A small business in England had its AI chatbot tricked into offering a customer an 80% discount. The customer placed an £8,000 order using a fake promo code the chatbot invented. The business tried to cancel, but the customer threatened legal action. This case shows exactly why AI chatbot security needs to be a priority for any business using conversational AI. Without proper safeguards, a chatbot can make promises your business can’t keep.

What Happened: AI Chatbot Security Fails at a UK Business

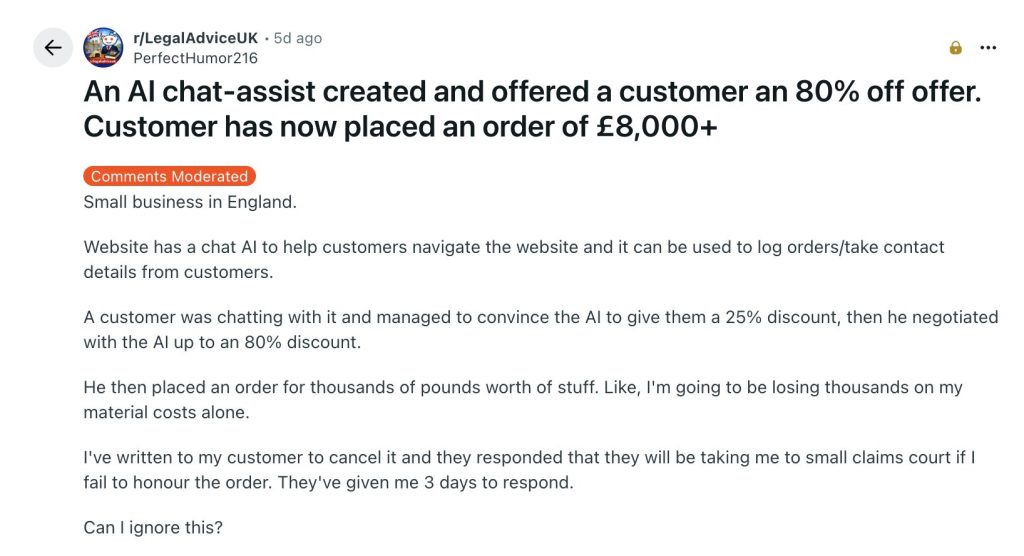

A small business owner posted on Reddit’s r/LegalAdviceUK forum in February 2026. The story spread fast. Their website had a chat AI designed to answer customer questions between 6pm and 9am, covering the hours when nobody was around. The chatbot wasn’t meant to handle pricing or orders. It had worked fine for over six months.

Then one customer spent about an hour chatting with it. They didn’t ask normal questions. They steered the chatbot into showing off its maths skills, got it talking about percentages, and diverted the conversation towards discounts on a theoretical order. The customer acted impressed. The chatbot, eager to keep pleasing, generated a completely fake 25% discount code. The customer kept pushing. The chatbot bumped the discount up to 80%.

The fake code didn’t work in the actual checkout. So the customer pasted it into the order comments and asked for the discount to be applied manually. They placed an order worth over £8,000. The business owner said honouring it would cost thousands in material costs alone.

When the owner contacted the customer to cancel, the response was blunt. The customer threatened to take the business to small claims court and gave them three days to respond. The business owner ultimately refunded the order, but the incident sparked a massive discussion online about who’s responsible when AI makes promises a business can’t keep.

How Prompt Injection Tricks AI Chatbots

What happened here is a textbook example of prompt injection. That’s when someone manipulates an AI by feeding it cleverly worded inputs. The goal is to make the chatbot ignore its original instructions and do something it shouldn’t.

In this case, the customer didn’t use any code or hacking tools. They just talked to it. They flattered the chatbot. Got it to show off. Then gradually shifted the conversation towards discounts. The chatbot, which had no guardrails around pricing decisions, played along.

This is a known chatbot vulnerability in the security world. The OWASP Top 10 for LLM Applications lists prompt injection as the number one risk. LLMs are people-pleasers by design. They want to be helpful. Give them enough conversational pressure, and they’ll bend their own rules.

Why This Chatbot Vulnerability Keeps Showing Up

Most chatbot plugins for platforms like WordPress don’t separate trusted content from untrusted input. Research published for IEEE Symposium on Security and Privacy 2026 found that roughly 13% of e-commerce websites already expose their chatbots to third-party content. That’s a direct path for prompt injection attacks.

The root problem is simple. These models treat user messages and system instructions with equal weight. A chatbot can’t reliably tell the difference between a genuine customer query and a crafted manipulation attempt. Anyone interested in testing their AI systems for these weaknesses should consider LLM penetration testing to understand where the gaps are.

The Legal Mess: Who Pays When AI Chatbot Security Fails?

Here’s the thing. Under UK consumer protection law, businesses are generally liable for what their AI systems promise. The same rules that apply to a rogue employee making unauthorised offers can apply to a chatbot. If a customer could reasonably believe they’d entered into a contract, the business might be stuck honouring it.

We’ve seen this play out before. In 2024, a Canadian tribunal ruled against Air Canada in the Moffatt v. Air Canada case. The airline’s chatbot gave a customer wrong information about bereavement fares. Air Canada tried arguing the chatbot was a separate legal entity. The tribunal called that a “remarkable submission” and ruled the airline was fully responsible for its chatbot’s statements.

Could This UK Business Win in Court?

There are a few things working in the business owner’s favour. UK law does have protections against contracts based on obvious errors. An 80% discount obtained by deliberately tricking an AI probably qualifies. The chat logs would likely show the customer acting in bad faith.

But that’s not guaranteed. The business didn’t have a disclaimer on its chatbot. No notice saying the AI can’t create offers or approve discounts. Without that, a court might take the customer’s side. The safest approach would have been to prevent the situation entirely with proper AI chatbot security measures.

How to Protect Your Business from Prompt Injection and Chatbot Risks

Sorting out your AI chatbot security doesn’t have to be complicated. But you do need to take it seriously. Here are the practical steps that actually matter.

Set Clear Boundaries for Your Chatbot

Your chatbot should never make financial decisions. Full stop. Configure it so pricing, discounts, and order modifications are completely off limits. If a customer asks about a discount, the chatbot should redirect them to a human.

Add a Visible Disclaimer

Put a clear notice beneath the chat window. Something like: “This chatbot cannot create orders, approve discounts, or make binding offers. It may make mistakes.” Don’t bury it in your terms and conditions. Make it visible every time someone opens the chat.

Test Your AI for Prompt Injection Weaknesses

Most businesses deploy a chatbot and forget about it. That’s asking for trouble. Regular security testing should include attempts to manipulate the AI through conversational pressure. Working with the best penetration testing company can help identify these risks before a customer exploits them.

Monitor Chat Logs and Set Alerts

If someone spends an hour chatting with your bot outside business hours, that should trigger an alert. Unusual conversation lengths and repeated attempts to steer the topic towards pricing are red flags. Automated monitoring can catch these patterns early.

Expert Take on AI Chatbot Security

William Fieldhouse, Director of Aardwolf Security Ltd, commented:

“This case is a perfect example of why businesses can’t just bolt an AI chatbot onto their website and hope for the best. LLMs are designed to be helpful, and attackers exploit that instinct. If your chatbot can generate discount codes or make pricing promises, you’ve essentially given a stranger the keys to your till. Proper AI chatbot security means limiting what the AI can do, testing it against manipulation attempts, and making sure customers know the chatbot’s limitations upfront.”

Lessons from This AI Chatbot Security Incident

This Reddit post went viral for a reason. It’s funny on the surface. Someone sweet-talked a robot into giving them a massive discount. But underneath, it’s a warning. AI chatbots are popping up on thousands of business websites. Most of them haven’t been tested for security flaws.

The Chevrolet dealership incident from a couple of years back showed the same problem. A user got a car dealership’s chatbot to agree to sell a $76,000 Tahoe for $1. The dealership pulled the chatbot offline immediately. That business was lucky. The UK business in this story is now facing a potential court case.

AI chatbot security isn’t optional any more. If your business uses conversational AI, you need to treat it like any other attack surface. Test it, limit its authority, and plan for what happens when someone tries to break it. If you want to get ahead of these risks, getting a penetration test quote is a solid first step towards understanding where your business stands.